SQA-001

[Paper] Classifier-Free Diffusion Guidance

Classifier-Free Diffusion Guidance

Classifier Guidance

原论文 Diffusion Models Beat GANs on Image Synthesis

在 conditioned diffusion 生成中使用一个 pretrained classifier, 是 post-training 的改进生成的方法

其中把每一步的 score estimate 和 classifier gradient 结合起来更新一步 \(\tilde{\epsilon}_{\theta}(z_{\lambda}, c) = \epsilon_{\theta}(z_{\lambda}, c) - w \sigma_{\lambda}\nabla_{z_{\lambda}}\log p_{\theta}(c\mid z_{\lambda})\)

- 该方法可以有一个 guidance 的系数 $w$, 用于控制 classifier 的影响程度

- Improve Inception score, but decreased diversity

- 实际上等价于在 \(\tilde{p_{\theta}}(z_{\lambda}\mid c)\propto p_{\theta}(z_{\lambda}\mid c)p_{\theta}(c\mid z_{\lambda})^w\) 分布中采样

缺点:

- 必须额外训练一个 classifier, 并且必须要在 noisy data 上训练

- whether classifier guidance is successful at boosting classifier-based metrics such as FID and Inception score (IS) simply because it is adversarial against such classifiers

方法概括: mix the score estimate of a conditional diffusion model with a jointly trained unconditional diffusion model.

Technical Details

- 论文中使用的 noise schedule 是 \(\lambda = -2\log\tan(au+b)\) where $u$ is uniform.

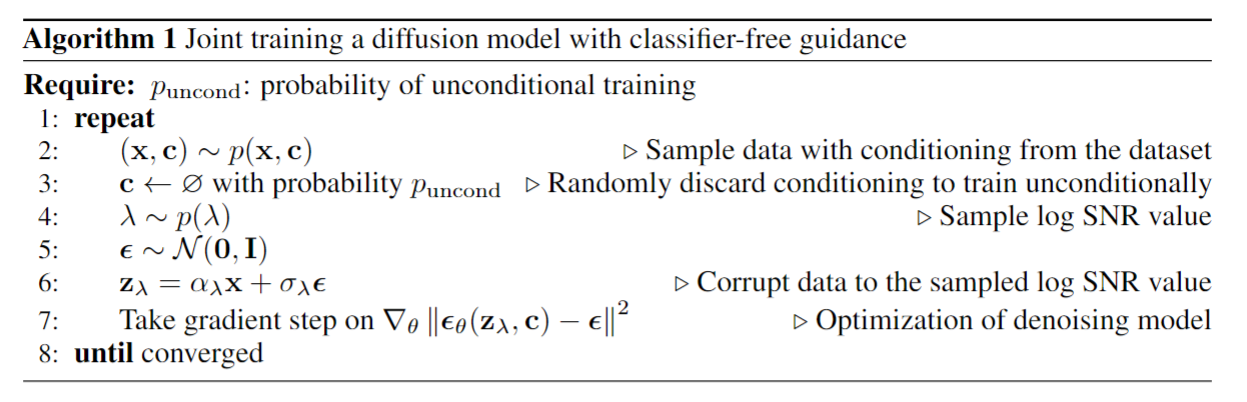

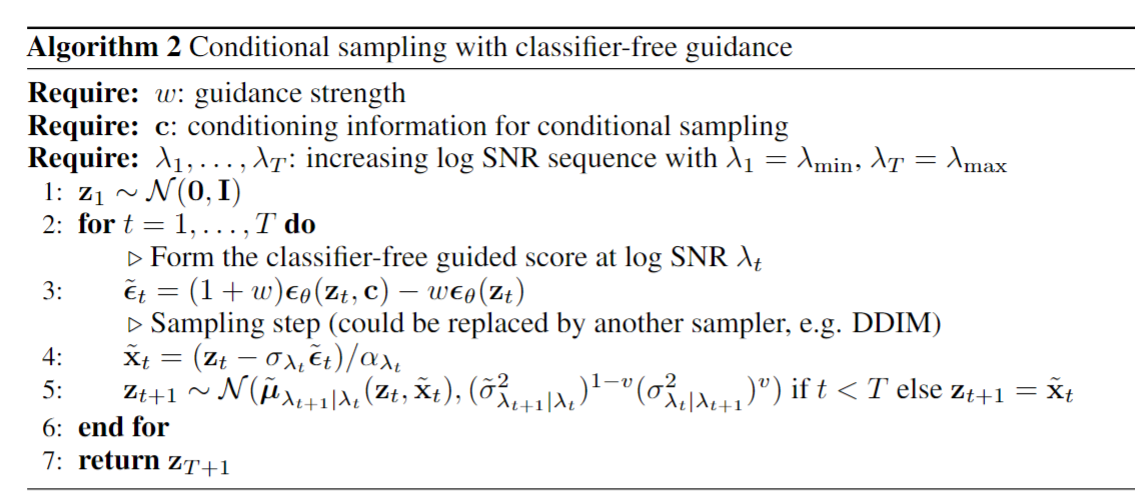

- Training and sampling process

其中使用同一个网络 $\epsilon_{\theta}(z_{\lambda}, c)$ 学习, 对于 unconditional 的 $c$ 使用 null token.- TODO: 具体使用什么架构来 condition on $c$?

- $p_{\text{uncond}}$ is a hyperparameter

- 其中 $\mu, \sigma$ 的公式可以在论文的 Background 部分中找到

- 这里的想法是在数学上做到和 classifier guidance 等价的分布

- 模型架构等和 classifier guidance 论文一样, 但是训练使用 continuous time training (即 noise level 是连续的而不是离散的)

详见论文

Experiment & Conclusion

When $w\sim 0.3$, the model achieves best FID score; After that, FID decreases but IS increases.

Interesting ideas

- An interesting property of certain generative models, such as GANs and flow-based models, is the ability to perform truncated or low temperature sampling by decreasing the variance or range of noise inputs to the generative model at sampling time. The intended effect is to decrease the diversity of the samples while increasing the quality of each individual sample. Truncation in BigGAN (Brock et al., 2019), for example, yields a tradeoff curve between FID score and Inception score for low and high amounts of truncation, respectively. Low temperature sampling in Glow (Kingma & Dhariwal, 2018) has a similar effect.

-

Previously, straightforward attempts of implementing truncation or low temperature sampling in diffusion models are ineffective. For example, scaling model scores or decreasing the variance of Gaussian noise in the reverse process cause the diffusion model to generate blurry, low quality samples

- Applying classifier guidance with weight $w + 1$ to an unconditional model would theoretically lead to the same result as applying classifier guidance with weight $w$ to a conditional model

Disadvantage

slower than classifier guidance during sampling